Recently, Matthew Chingos and Kristin Blagg of the Urban Institute published a blog post about the rise in national test scores for schools in Washington, DC. The post has been republished elsewhere and, consequently, cited frequently in the edu-blogosphere and press.

I have four specific objections to their post, which I'll lay out below (from least to most technical). But let me start by saying I don't think Chingos or Blagg are hacks; far from it. I want to engage on what they wrote because I think it's important and I think they are serious. My tweeting yesterday could easily be interpreted as my saying otherwise, and for that, I apologize.

However...

We now live in an atmosphere where, far too often, education research is being willfully misrepresented by people with ideological, personal, or other agendas. As I've said many times here, I don't pretend that I don't have a point of view. But I also work hard to keep the evidence I cite in proper context.

Others, like Michelle Rhee and Jonathan Chait, have no such qualms. It is, therefore, far past time for public intellectuals and researchers to start asking themselves what responsibilities they bear for the willful misuse of their work. For example...

Objection #1: The framing of the research. I realize this was just a blog post, and that the standards of a peer-reviewed article don't apply here. But I also understand that Urban is an influential think tank with an extensive communications shop, so anything they put out is going to get traction. Which is why I have a problem with starting the post with this paragraph:

Student performance in the nation’s capital has increased so dramatically that it has attracted significant attention and prompted many to ask whether gentrification, rather than an improvement in school quality, is behind the higher scores. Our new analysis shows that demographic change explains some, but by no means all, of the increase in scores.

Chingos and Blagg have got two big, big assumptions laid out right at the top of their post (I'll be referring to this version from Urban Institute's website throughout; all emphases are mine):

- Student performance in DC has "increased so dramatically."

- The two factors that influence test scores are gentrification -- in other words, changes in the demographic composition of DC's student body -- and improvements in school quality.

The fact is, as I show below, there is at least one other factor that has contributed significantly to changes in DC test scores, and there are almost certainly others as well.

Continuing directly:

The National Assessment of Educational Progress (NAEP), known as the “nation’s report card,” tests representative samples of fourth- and eighth-grade students in mathematics and reading every two years. NAEP scores reflect not just school quality, but also the characteristics of the students taking the test. For example, the difference in scores between Massachusetts and Mississippi reflects both the impact of the state’s schools and differences in state poverty rates and other demographics. Likewise, changes in NAEP performance over time can result from changes in both school quality and student demographics.

The question, then, is whether DC’s sizable improvement is the result of changing demographics, as some commentators claim, or improving quality. Our analysis of student-level NAEP data from DC, including students from charter and traditional public schools, compares the increase in scores from 2005 to 2013 with the increase that might have been expected based on shifts in demographic factors including race/ethnicity, gender, age, and language spoken at home. The methodology is similar to the one used in a new online tool showing state NAEP performance (the tool excludes DC because it is not a state).

We are now three paragraphs in and the authors have repeatedly set up a particular research framework: changes in the NAEP are due either to changes in student demographics or to improving quality in schools.

Now, as I said above, Chingos and Blagg are not hacks. Which is why they admirably explain, in detail, why they did not include the most important student demographic factor affecting test scores -- socio-economic status -- in their analysis.

But it's also why, in the third-to-last paragraph, they write this:

The bottom line is that gentrification alone cannot explain why student scores improved in Washington, DC, a conclusion that echoes previous analyses using publicly available data. DC education saw many changes over this period, including reform-oriented chancellors, mayoral control, and a rapidly expanding charter sector, but we cannot identify which policy changes, if any, produced these results.

Go ahead and read the entire post; you'll find that "if any" clause is the only time they suggest there may be other factors influencing DC's NAEP scores besides student characteristics and school quality.

Am I picking nits? I suppose that's a matter of debate. But when you write a post that's framed in this way, you shouldn't be the least surprised when an education policy dilettante like Jonathan Chait picks up on your cues:

But here is an odd thing that none of these sources mention: Rhee’s policies have worked. Studies have found that Rhee’s teacher-evaluation system has indeed increased student learning. What’s more, the overall performance of D.C. public school students on the National Assessment of Education Progress (NAEP) has risen dramatically and outpaced the rest of the country. And if you suspect cheating or “teaching to the test” is the cause, bear in mind NAEP tests are not the ones used in teacher evaluations; it’s a test used to assess national trends, with no incentive to cheat. (My wife works for a D.C. charter school.)

Some critics have suggested that perhaps the changing demographics in Washington (which has grown whiter and more affluent) account for the improvement. Kristin Blagg and Matthew Chingos at the Urban Institute dig into the data, and the answer is: Nope, that’s not it. The amount of improvement that would be expected by demographic change alone (the blue bars) is exceeded by the actual improvement (the gray bars): [emphasis mine]

We'll save the claims about Rhee's innumerate teacher evaluation system for another day. Does it look like "if any" was enough to stop Chait from making a way overblown claim about Rhee's policies being the driver of DC's test score rise? Read the whole thing: does it seem to you that Chait is willing to entertain any other possibilities for DC's "dramatic" rise in NAEP scores? And are you at all surprised he used Blagg and Chingos's post the way he did, given how they wrote it?

Michelle Rhee, former chancellor of DCPS, couldn't wait to get in front of a camera and use Blagg and Chingos's post to justify herself:

(0:20) Well, over the last decade really, the scores in DC have outpaced the rest of the nation. In fact, over the last few years, the gains that DC kids have seen have actually led the nation.

We'll also set aside Rhee's claims about "school choice" in DC for now and, instead, point out that at least she is willing to compare DC's "gains" to other systems. Her comparison is, as I'll show below, highly problematic, but at least she's willing to acknowledge that a "dramatic" gain must be viewed in relative terms. (I'm also going to stay away from her response to the allegations of cheating in DC; I'm sure others with more knowledge about this topic will weigh in.)

Rhee, however, is also not willing to acknowledge that DC's gains might be due to factors other than student characteristics or policy changes.

(2:43) And then people said, well it was because of shifts in demographics; you know, basically meaning more white kids are coming in. And this most recent study last week showed that was not the case either. And so it's high time people realized, no, actually, poor and minority kids can learn if they have great teachers in front of them every single day and if families have school choice and are allowed to choose the programs that are serving their kids best. If you put those dynamics in place, wow, kids can actually learn.

You'll notice how Rhee conflates "poor" and "minority" students, even though Blagg and Chingos did not address socio-economic status directly in their analysis.* Nowhere in this interview, however, does Rhee acknowledge there may be other factors involved in the rise of DC's NAEP scores.

Let me be clear here: Blagg and Chingos did not put words into Rhee's mouth or Chait's blog. But they know how the debate is being conducted now; they know folks like Rhee and Chait are out there waiting to pounce on this stuff. Why, then, frame your conclusions this way? Why limit your cautions to a mere "if any" when you know for certain someone is going to take your stuff and use it for their own ends?

At some point, you have to own at least some of the consequences of putting your research into the public sphere in a particular way. At the very least, you need to point out when it's being abused. Will Blagg and Chingos do that? I hope so.

* * *

This next part is more technical. Skip down if you're not interested.

Objection #2: Where are the secular effects? Bob Somerby of The Daily Howler is usually not included in the rolls of the edu-blogosphere, as much of his work is media criticism. But he's been writing about the abuse of NAEP scores and other education topics for a long time.

Here, he addresses Chait's post about Blagg and Chingos, making a rather obvious point:

Truly, this is sad. Chait accepts the Urban Institute's unexplained demographic projection without even batting an eye. Incomparably, we decided to do something which made a bit more sense:

We decided to compare DC's score gains during that period to those recorded in other big cities. Our decision to run this simple check required almost no IQ points.

Duh. As everyone knows except New York Times readers, NAEP scores were rising all over the country during the years in question. To simplify the demographic confusion, we looked at how DC's black kids did during that period, as compared to their peers in other cities.

In what you see below, we're including every city school system which took part in the NAEP in 2005 and 2013. As you can probably see, the score gain in DCPS was remarkably average:

Growth in average scores, Grade 8 math, 2005-2013, NAEP, black students only

| District | Score gain |
|---|---|
| Atlanta | 19.78 |
| Los Angeles | 16.72 |
| Boston | 15.17 |
| Chicago | 14.29 |
| Houston | 13.23 |
| DCPS | 11.48 |
| Charlotte | 7.83 |
| San Diego | 7.39 |
| New York City | 5.82 |
| Cleveland | 5.34 |
| Austin | 4.85 |

For all NAEP data, start here.

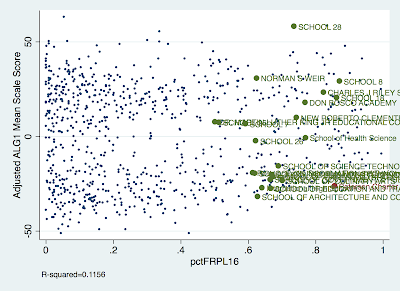

Let me take this a bit further. Here are some quick and dirty graphs using NAEP scores as taken from the Trial Urban District Assessment (TUDA) and the national NAEP. Understand, we have to approach these with great caution: I haven't done anything to adjust for student characteristics or a myriad of other factors that influence test scores. But this is good enough to make a larger point.

Here are the average scale scores for all students on the Grade 4 Reading NAEP test. No question, DC's score rose -- but so did the scores of many other cities. Even the nation as a whole rose a bit from 2003 to 2013.

Economists will sometimes call this a secular effect.** The basic idea is that if you're going to look at a gain for a specific individual, you have to take into account the gains across all individuals. In other words: it's just not warranted to say a gain is "dramatic" unless you put it into some sort of context. You must ask: how do DC's gains stack up against other cities' gains? Other states?***
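One crude way to put a gain in context is to rank it against the gains of comparable districts over the same period. Here is a minimal sketch using only the numbers in Somerby's table above (black students, Grade 8 math, 2005-2013). The function name `relative_gain` is my own, and like the graphs here, it adjusts for nothing.

```python
from statistics import median

# Grade 8 math NAEP gains, 2005-2013, black students only, copied from
# the Somerby table quoted above (scale-score points).
GAINS = {
    "Atlanta": 19.78,
    "Los Angeles": 16.72,
    "Boston": 15.17,
    "Chicago": 14.29,
    "Houston": 13.23,
    "DCPS": 11.48,
    "Charlotte": 7.83,
    "San Diego": 7.39,
    "New York City": 5.82,
    "Cleveland": 5.34,
    "Austin": 4.85,
}


def relative_gain(gains, district):
    """Place one district's gain in the context of every district's gain.

    Returns (rank, number of districts, median gain, gain minus median),
    where rank 1 is the largest gain.
    """
    ranked = sorted(gains, key=gains.get, reverse=True)
    rank = ranked.index(district) + 1
    mid = median(gains.values())
    return rank, len(gains), mid, gains[district] - mid


rank, n, mid, diff = relative_gain(GAINS, "DCPS")
print(f"DCPS ranks {rank} of {n}; median gain {mid:.2f}; DCPS minus median {diff:+.2f}")
```

Run on Somerby's numbers, DCPS comes out 6th of 11, exactly at the median gain. That is his "remarkably average" point, stated as arithmetic.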

Maybe Blagg and Chingos don't have the data to run this analysis. OK -- then don't say the gains are "dramatic" or "sizable." But even if you do a comparative analysis and you conclude they are, you have to deal with another issue:

Objection #3: Equating gains on different parts of a scale. Again, here's Somerby:

How well did DC's eighth-graders score in math in 2013, at the end of Rhee's reign?

In theory, it's easier to produce large score gains if you're starting from a very low point. DCPS was low-scoring, even compared to other big cities, when Rhee's tenure began. [emphasis mine]

As Rhee points out, DC scores did rise more relative to many other cities.

But gains at the low part of the score distribution are not necessarily equivalent to gains at the high part. It may well be easier to get gains when you're at the bottom, if only because you have nowhere to go but up.

This is one of the biggest problems with the claims of DC's "dramatic" test score gains: it's hard to find another school district with which to compare it. Here, for example, are the Grade 8 Reading scores over the period in question:

Atlanta, Cleveland, and DC started in 2003 at close to the same place. Cleveland finished below DC, but Atlanta finished above. Los Angeles, meanwhile, which was below all three, rocketed above DC.

Does this tell us anything about policy changes and their effects on student outcomes? Can we confidently say LA rocks? Absolutely not; we didn't adjust for any student demographic changes or other factors -- but even if we did, we couldn't be sure what was causing the changes.

The point here, however, is that even with these crude, unadjusted measures, there is far more to the story than simply stating that DC has "dramatic" and "sizable" gains. And the gains of high-flyers like Boston and Charlotte can't be equated with the gains of cities on the lower part of the scale.
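One partial remedy is to compare a district only with districts that started near the same point on the scale, the way Atlanta, Cleveland, and DC did in 2003. Here is a minimal sketch. The function name, the `tolerance` band, and the example scores are all hypothetical. It does nothing about demographics, and it cannot tell us whether a point gained near the bottom of the scale means the same thing as a point gained near the top.

```python
def similar_baseline_gains(scores, target, tolerance):
    """Gains for districts whose starting score is close to the target's.

    scores maps district name -> (start_score, end_score) on the same scale.
    Returns {district: end - start} for every district (target included)
    whose start score is within `tolerance` points of the target's start.
    """
    target_start = scores[target][0]
    return {
        name: end - start
        for name, (start, end) in scores.items()
        if abs(start - target_start) <= tolerance
    }


# Invented numbers for illustration only; these are NOT NAEP results.
example = {
    "District A": (240.0, 250.0),
    "District B": (242.0, 249.0),
    "District C": (270.0, 274.0),
}
print(similar_baseline_gains(example, "District A", tolerance=5))
# {'District A': 10.0, 'District B': 7.0}
```

The choice of `tolerance` is arbitrary. In real data, a narrow band can leave a district with almost nothing to be compared against, which is the problem described above.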

Again: I didn't see any cautions in Blagg and Chingos's work about this.

Objection #4: Assuming fixed demographic effects. This is going to get a bit technical, so bear with me. Somerby complains that Blagg and Chingos's methods are "unexplained." That's not really fair: they do include a link to a NAEP analysis site from Urban that includes a Technical Appendix that spells out the basic idea behind their score adjustments:

We performed adjustments by estimating regression coefficients using the student-level data from 2003, roughly halfway between the available periods for mathematics (1996–2013) and reading (1998–2013). Specifically, we regress the test score of each student in 2003 on the set of control variables described above.¹ Control variables are included in the regression using dummy variables identifying each of the groups of students for each construct, with the exception of the one arbitrarily chosen group that is the omitted category (except for age, which is included as a continuous variable).

Using this regression, we estimate a residual for each student across all assessment years, which is the difference between their actual score and a predicted score based on the relationship between the predictors and test scores in 2003. We calculate the adjusted state score as the sum of the mean residual and the mean score in the given test year (e.g., 1996, 1998, and so on), re-normed to the mean of the given test year. Essentially, we perform an adjustment for each testing year, but we base the adjustment on the relationship between NAEP scores and the control variables in 2003. [emphasis mine]

So here's the thing: this method is based on an assumption that the effects of student characteristics on test scores remain fixed across time. In other words: the effect of a student qualifying for free lunch (a proxy measure of SES) in 2003 is assumed to be the same for every test administration between 1996 and 2013.

But we don't know if that's true. If, for example, there is a peer effect that comes from changing cohort demographics over time, there may be a change in how a student's characteristics affect their test scores, even if the characteristics themselves don't change between individuals at different points in time.

Now, there's actually something a researcher can do about this, and Blagg and Chingos likely have the data they need. Rather than regressing just one cross section of test scores on student demographics, regress all the years you're studying together, adding year dummy variables and interactions between the demographic measures and the years. That way, you'll see whether the effects of student characteristics change over time, and you'll get a better comparison between years.
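In the simplest case, with one categorical control, this suggestion can be sketched without a regression library. A model with year dummies, group dummies, and every year-by-group interaction fits each year-by-group cell mean exactly, so each interaction coefficient equals the change in a group's gap with the reference group relative to the base year (a difference-in-differences of cell means). The function names and data below are hypothetical. A real analysis would use all of Urban's controls, NAEP's weights and plausible values, and standard errors; this only shows what the interaction terms measure. It also assumes every year-by-group cell is non-empty.

```python
from statistics import mean


def cell_means(data):
    """data maps year -> list of (group, score); returns {(year, group): mean}."""
    cells = {}
    for year, records in data.items():
        for group, score in records:
            cells.setdefault((year, group), []).append(score)
    return {key: mean(scores) for key, scores in cells.items()}


def interaction_effects(data, base_year, reference):
    """Year-by-group interaction coefficients from a saturated model.

    Each coefficient is
        (m[t, g] - m[t, ref]) - (m[base, g] - m[base, ref]),
    i.e. how much group g's gap with the reference group moved since the
    base year. Zero means the group's 'effect' was unchanged that year.
    """
    m = cell_means(data)
    groups = {g for (_, g) in m} - {reference}
    return {
        (year, g): (m[year, g] - m[year, reference])
        - (m[base_year, g] - m[base_year, reference])
        for year in data
        if year != base_year
        for g in groups
    }


# Invented cell data for illustration only (not NAEP results).
data = {
    2003: [("A", 200.0), ("B", 240.0)],
    2005: [("A", 202.0), ("B", 242.0)],
    2013: [("A", 215.0), ("B", 245.0)],
}
print(interaction_effects(data, base_year=2003, reference="A"))
# {(2005, 'B'): 0.0, (2013, 'B'): -10.0}
```

Here the A-B gap holds steady in 2005 but shrinks by 10 points by 2013. A method that fixes the effects at their 2003 values would miss exactly that shift.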

In many cases you couldn't do this, because each interaction adds a covariate, which costs you another degree of freedom in your regression. But Blagg and Chingos are working with microdata, so their n is going to be very high. Adding those interactions shouldn't be a problem.

Of course, it's quite possible doing all this won't change much. And you'd still have the problem of equating different parts of the scale. But I think this is a more defensible technique; then again, given that we're really in the weeds here, I'm open to disagreement.

* * *

Look, I don't want to equate my methodological qualms about Blagg and Chingos's post with the outright abuse perpetrated by Chait and Rhee. But I'm also not about to let Blagg and Chingos off the hook entirely for what Chait and Rhee did.

The sad truth is that the willful misapplication of education research has become a defining feature of our current debate about school policy. Is my "side" guilty of this misconduct? Sure; there's stuff out there that supports my point of view that I don't think is rigorous enough to be cited. Of course, my "side" hardly has the infrastructure of the "reformers" for producing and promoting research of questionable value in service of a particular agenda. Still, that doesn't excuse it, and I'll take a deserved hit for not doing more to call it out.

But it would be nice to be joined by folks from the other "side" who feel the same way.

Let me say something else about all this: there's actually little doubt in my mind that Rhee's policies did increase test scores. How could they not? Rhee had her staff focused on test score gains pretty much to the exclusion of everything else. It's not far-fetched to imagine that concentrating on local test-taking carried over and affected outcomes on the NAEP.

But Rhee's obsession with scores trumped any obligation she may have had to create an atmosphere of collegiality and collaboration; that's borne out by the resounding rejection of Mayor Adrian Fenty, whose reelection bid was largely a referendum on Rhee.

And that, perhaps, is the biggest problem with Blagg and Chingos's analysis -- or, more accurately, with the entire test-based debate about education policy. I'm not saying test scores don't count for something... but they surely don't count for everything. Was it worth breaking the DC teachers union and destroying teacher morale just to get a few more points on the NAEP? Is anyone really prepared to make that argument?

One more thing: I was at a panel at the American Educational Research Association's annual conference this past spring about Washington DC's schools. The National Research Council presented a report on the state of a project to create a rigorous evaluation system for DC's schools.

It was, frankly, depressing. As this report from last year describes, the district has not built up the data capacity necessary to determine whether or not the specific policies implemented in DC have actually worked. Anthony Bryk, a highly respected researcher, was visibly angry as he described the inadequacy of the DC data, and how no one seemed to be able to do anything about improving it.**** From the report:

The District was making progress in collecting education data and making it publicly available during the time of this evaluation, but the city does not have a fully operational, comprehensive infrastructure for data that meets PERAA’s goals or its own needs as a state for purposes of education. To meet these needs, the report says D.C. should have a single online “data warehouse” accessible to educators, researchers, and the public that provides data about learning conditions and academic outcomes in both DCPS and charter schools. Such a warehouse would allow users to examine trends over time, aggregate data about students and student groups, and coordinate data collection and analysis across agencies (education, justice, and human services).

To confront the serious and persistent disparities in learning opportunities and academic progress across student groups and wards, the city should address needs for centralized, systemwide monitoring and oversight of all public schools and students, fair distribution of educational resources across wards, ongoing assessment of strategies for improving teacher quality, and more effective collaboration among public agencies and the private sector. In addition, the committee recommended that D.C. support ongoing, independent evaluation of its educational system, including the collection and analysis of primary data at the school level. [emphasis mine]

When you live in a data hole, this is what you get: incomplete research, easily subject to abuse.

When we get down to it, we must admit that we know next to nothing about whether any of the educational experiments foisted on the children of Washington DC have done anything to help them.

Given this reality, I would respectfully suggest to anyone who wades into an analysis of Washington DC's schools that they stop and think very carefully about the implications of their research before publishing it. In the absence of good data and rigorous analysis, what you put out there matters even more than it would in places where the data are more comprehensive.

Caveat regressor.

ADDING: It's important to point out, as Bruce Baker does here, that the NAEP gains in DC largely pre-date Rhee-form.

* They did use parent education as a proxy measure of SES in their Grade 8 analyses. But that measure is a self-reported, very rough categorical measure that breaks down parental education into: didn't finish high school, finished high school, had some education after high school, finished college. You go with the data you've got, but this measure is obviously quite crude.

** I first came across the term in Murnane and Willett's Methods Matter, which I highly recommend to anyone interested in quantitative methods in the social sciences.

*** No, DC is not a state. But it does have a population larger than Vermont and Wyoming.

**** To be fair, his opinion wasn't shared by everyone on the panel. Michael Feuer of GWU stated that he thought data collection and analysis had improved, even if it wasn't where it needed to be.