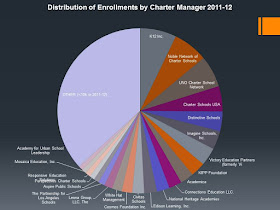

Let me show a pie chart Bruce Baker made that I keep coming back to:

This is from a dataset I made (with Bruce's guidance) of charter schools and their affiliated charter management organizations (CMOs). We hear a lot these days about KIPP or Uncommon or Success Academies; however, we hear much less about Academica or Charter Schools USA or White Hat. What do we really know about these schools?

Further: what about that "OTHER" category? Who runs these schools? How do they perform? How do they affect their local districts?

One goal I have over the next year at this blog is to spend some more time looking at these lesser-known charter schools -- the ones that, in reality, are the backbone of the charter sector. Let me start here in New Jersey with a story that doesn't involve a high-profile charter leader like Eva Moskowitz or a high-profile CMO like KIPP; however, it's a story that I believe is quite instructive...

* * *

Red Bank might be best known as the hometown of the great Count Basie. Like many small towns in New Jersey, it runs its own K-8 school district; high school students attend a larger "regional" high school that is also fed by two other K-8 districts, Little Silver and Shrewsbury. The three districts are all quite small; when combined, their size is actually smaller than many other K-12 districts or regional high schools in the area.

This map from the National Center for Education Statistics shows the Red Bank Regional High School's total area, and the three smaller K-8 districts within it. You might wonder why the three districts don't consolidate; just the other day, NJ Senate President (and probable gubernatorial candidate) Steve Sweeney argued he'd like to do away with K-8 districts altogether. The estimates as to how much money would be saved are probably too high, but in this case it would still make a lot of sense.

The reality, however, is that these three K-8 districts are actually quite different:

Here are the free-lunch eligible rates for the three K-8 districts, and the regional high school. Red Bank students are far more likely to qualify for free lunch, a measure of economic disadvantage. Last year, Shrewsbury had one student who qualified for free lunch. It's safe to guess most of the high school's FL students came from Red Bank.

But I've also included another school: Red Bank Charter School. Its FL population is higher than Little Silver's or Shrewsbury's, but only a fraction of the FL population in Red Bank. What's going on?

Well, if you read the local press (via RedBankGreen.com), you'll see that this charter school is a huge source of controversy in the town:

> With the first flakes of an anticipated blizzard falling outside, a hearing on a proposed enrollment expansion by the Red Bank Charter School was predictably one-sided Friday night.
>
> As expected, charter school Principal Meredith Pennotti was a no-show, as were the school’s trustees, but not because of the weather. They issued a statement earlier in the day saying they were staying way because the panel that called the hurry-up session should take more time in order to conduct “an in-depth analysis without outside pressure.”
>
> Less expected was district Superintendent Jared Rumage’s strongly worded attack of charter school data, which he said obscured its role in making Red Bank “the most segregated school system in New Jersey.”

That's a very strong claim. I'm not about to take it on, but I do think it's worth looking more closely at how the charter school's proposed expansion might affect Red Bank's future:

> The charter school proposal calls for an enrollment increase to 400 students over three years beginning in September. Supporters of the non-charter borough schools contend the expansion would “devastate” the district, draining it of already-insufficient funding, a claim that charter school officials and their allies disputed at a closed-door meeting Wednesday night.
>
> As a row of chairs reserved for charter school officials sat conspicuously empty, a standing-room crowd gathered in the middle school auditorium heard Rumage revisit familiar themes, claiming that the expansion plan filed with the state Department of Education on December 1 relies on outdated perceptions about the district.
>
> Continuing a battle of statistics that’s been waged for the past eight weeks, Rumage countered assertions made at a closed meeting Wednesday, where charter school parents were told the expansion would have no adverse impact on the district, and would in fact bolster the district coffers.

This is, of course, the standard play by charter schools these days when confronted with the fiscal damage they do to their hosting districts: claim that they are actually helping, not hurting, their hosts. Julia Sass Rubin*, however, did a study of how Red Bank CS funding affects the local schools. What she points out -- and what seems to have been lost on the charter's spokespeople in their own presentation to their parents -- is that the charter gets less funding per pupil largely because it enrolls a different student population than the public district schools.

This is one of the great, untold secrets of NJ charter school funding: the amounts are weighted by the types of students you enroll. If a charter school takes a student who qualifies for free lunch, or is Limited English Proficient, or has a special education need, the charter gets more money than if it took a student who was not in those categories. That's only fair, as we know students who are at-risk or have a particular educational need cost more to educate.
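To make the weighting idea concrete, here is a minimal sketch in Python. Every number in it is invented for illustration: the base amount, the three weights, and both schools' enrollments are assumptions, not New Jersey's actual formula values or any real school's data. It also simplifies by adding the weights together for each category, where a real formula may treat students who fall into several categories differently.

```python
# Hypothetical values for illustration only; NOT New Jersey's actual formula.
BASE_PER_PUPIL = 10_000.0
WEIGHTS = {"free_lunch": 0.5, "lep": 0.5, "special_ed": 1.0}


def weighted_funding(enrollment, counts):
    """Total funding: the base amount for every student, plus an extra
    (weight x base) for each student counted in a weighted category."""
    extra_pupils = sum(WEIGHTS[category] * n for category, n in counts.items())
    return BASE_PER_PUPIL * (enrollment + extra_pupils)


def per_pupil(enrollment, counts):
    """Average funding per enrolled student."""
    return weighted_funding(enrollment, counts) / enrollment


# Two made-up schools of the same size but with different student populations.
school_a = per_pupil(200, {"free_lunch": 160, "lep": 40, "special_ed": 30})
school_b = per_pupil(200, {"free_lunch": 80, "lep": 10, "special_ed": 10})

print(f"School A per pupil: ${school_a:,.0f}")
print(f"School B per pupil: ${school_b:,.0f}")
```

Under these made-up weights, the school with fewer students in the weighted categories receives less per pupil even though both schools are funded by exactly the same rule. A per-pupil gap alone therefore says little about whether a school is being shortchanged.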

Here are the special education classification rates for all schools in the Red Bank Regional HS area. Red Bank CS has, by far, the lowest classification rate of any district in the region. Of course they are going to get less funding; they don't need it as much as their host district, because their students aren't as expensive to educate. Further, by enrolling fewer special education students, they are concentrating those students in the Red Bank Borough Public Schools. Is this a good thing?

But that's not the only form of segregation that's happening:

In addition, there's one more very curious thing about this situation. There are, in fact, other areas in New Jersey with K-8 districts that feed into regional high schools, and those K-8 districts, like here, can have very different student populations. StateAidGuy points out a particularly interesting case in Manchester Regional High School: many students who attend K-8 school in North Haledon, a more affluent town than its other neighboring feeders, don't go on to the regional high school. The unstated reason is that parents in that town do not want their children attending high school with children from less-affluent districts; Jeff also notes the racial component to that situation.

But that's not the case for Red Bank Regional High School; in fact, the school attracts more students than those who graduate from its feeders!

These are the sizes of different student cohorts when they are in Grade 8 in the feeders, and then Grade 9 in the high school. The high school actually attracts more students from the area: it has popular vocational academies that can enroll students from other districts, and an extensive International Baccalaureate program.

So the notion that the largely white and more affluent families in Shrewsbury and Little Silver would be scared off by a three-district consolidation with Red Bank doesn't seem to have a lot of evidence to support it. The students already come together in the high school, and that appears to be working out well (at least as far as we can learn from the numbers).

Furthermore, the three towns are within a small geographic area, about 4 miles across. A centrally located school, particularly for the younger children, wouldn't be any further than a couple of miles away for families. It would be quite feasible to implement a "Princeton Plan" for the area; for example, all K-2 students would attend one school, 3-5 another, and 6-8 another.

But the Red Bank Charter School appears to be moving the area away from desegregation. If the expansion goes through, it's likely to make any chance at consolidation go away, because the Red Bank district is likely to become more segregated.

Again, the effects of consolidation on the budgets of the schools would probably be modest -- but the effects on desegregation could be enormous. New Jersey has highly segregated schools; this would be a real chance to undo some of that. But expanding a charter that serves a fundamentally different student population is almost certain to make segregation in the Red Bank region more calcified.

And for what? In its application for expansion, Red Bank CS boasts about its higher proficiency rates compared with Red Bank Boro. But it's not hard to boost your test scores when you enroll fewer special needs students and fewer economically disadvantaged students. What if you take into account the different student populations?

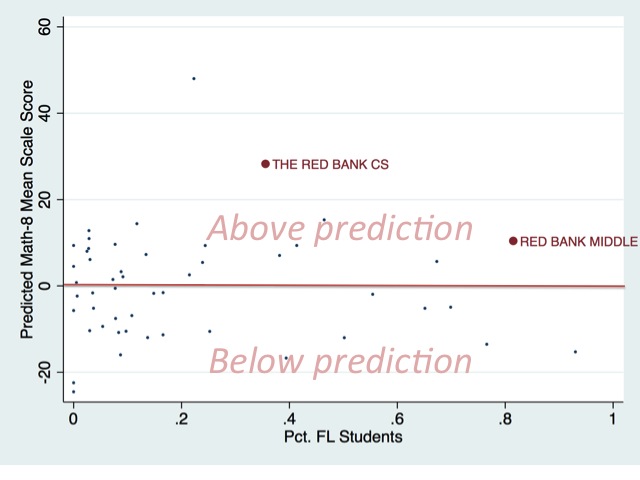

What I'm basically doing here is looking at all the schools in the county and, based on their scores and their students, creating a model that predicts where we would expect the school's average test score to be given its student population. Some schools will "beat prediction": they'll score higher than we expect. Some will score lower than prediction.

Let me be very clear on this: I would never suggest this is a comprehensive way to judge a school's effectiveness. I'm only saying that if you're going to make a case that a school should be allowed to expand based on its test scores, this is a far more valid approach than simply putting out numbers that are heavily influenced by student population characteristics.

Let's start with Grade 5 English Language Arts (ELA).

That's Red Bank Middle School in the upper right. About 76 percent of the variation in Grade 5 ELA scale scores in Monmouth County can be statistically explained by the percentage of FRPL students enrolled in each school. Red Bank Middle has one of the highest FRPL rates in the county, yet it does exceptionally well in getting test scores above where we'd predict they'd be based on its student population.
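For readers who want to see the mechanics, here is a minimal sketch of this kind of model in Python. It is a simplified stand-in with a single predictor (FRPL percentage), not necessarily the exact specification behind the charts, and the six schools and their scores are invented for illustration. It fits a line by ordinary least squares, reports the share of variation explained (R-squared), and computes each school's residual: actual score minus predicted score. A positive residual means the school "beats prediction."

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def r_squared(xs, ys, slope, intercept):
    """Share of the variation in ys that the fitted line accounts for."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


# Invented data: school name -> (FRPL percent, mean scale score).
schools = {
    "School A": (5.0, 770.0),
    "School B": (20.0, 760.0),
    "School C": (40.0, 748.0),
    "School D": (60.0, 745.0),
    "School E": (85.0, 740.0),
    "School F": (90.0, 722.0),
}

frpl = [x for x, _ in schools.values()]
scores = [y for _, y in schools.values()]

slope, intercept = fit_line(frpl, scores)
r2 = r_squared(frpl, scores, slope, intercept)

# Residual = actual - predicted; positive means the school beats prediction.
residuals = {
    name: score - (intercept + slope * pct)
    for name, (pct, score) in schools.items()
}

print(f"R-squared: {r2:.2f}")
for name, resid in residuals.items():
    print(f"{name}: {resid:+.1f}")
```

In this invented data, School E and School F have similar FRPL rates, yet one lands above the line and the other below it. That is the comparison the adjusted scores are meant to surface: how a school does relative to schools serving similar students, not relative to the county as a whole.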

What about Grade 8?

For those with sharp eyes: I changed the x-axis to FL instead of FRPL (the model still uses FRPL). The charter does somewhat better than Red Bank Middle School; however, the public district school in Red Bank still beats prediction.

Here are the models for math:

Same thing: in Grade 5, Red Bank Middle beats Red Bank CS in adjusted scores. The reverse happens in Grade 8, but Red Bank Middle still beats prediction.

I always say this when I do these: absent any other information, I have no doubt that Red Bank CS is full of hard-working students and dedicated teachers; they should all be proud of their accomplishments. But it's clear that it's very hard to make the case that Red Bank CS is far and away superior to Red Bank Middle.

The Red Bank region has a chance to do something extraordinary: create a fully-integrated school district that serves all children well. I don't for a second believe that will be at all easy; we have plenty of research on the tracking practices, based on race and other factors, of schools that are integrated in name only.

But why turn down the chance to at least attempt something nearly everyone agrees is desirable in the name of "choice"? Especially when the "choice" is going to have a negative effect on the hosting school's finances? And when there's little evidence the "choice" is bringing a lot of extra value to its students to begin with?

Who knows -- maybe there's some way to have Red Bank CS be part of this. Maybe it can provide some form of "choice" to all students in the region. But not like this; all an expansion will do in this case is make it even harder to desegregate the area's schools. This is exactly the opposite of NJDOE Commissioner Hespe's mandate; can he honestly say there are benefits from expanding Red Bank CS that are worth it?

I wish I could say that what's happening in Red Bank is an isolated incident; it's not. Let's stay out in the NJ 'burbs for our next stop...

Stay strong, Red Bank!

(photo via RedBankGreen.com)

* Full disclosure: Julia and I co-wrote the NJ charter school report for the Tanner Foundation last year.

Richard Whitmire